Source From Here

Goal

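This article focuses on hands-on Docker usage and covers the following parts:

Before the hands-on part, it briefly explains how Docker differs from virtualization and introduces Docker's key components. The preparation step then requires installing Docker and logging in to Docker Hub.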

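Introduction to Docker

Docker is an open-source, multi-platform project for quickly deploying lightweight, isolated environments anywhere from a laptop to public or private clouds. Docker uses Linux kernel features such as namespaces and control groups (cgroups) to isolate environments and to control resources such as CPU, memory, and networking.

Project site: http://www.docker.com/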

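How Docker Containers Differ from Virtualization

As mentioned above, Docker provides isolated environments, but it works differently from virtualization.

Virtualization

Virtualization usually installs a hypervisor on the host OS. The hypervisor manages the virtual machines, and each virtual machine runs its own guest operating system.

Docker Container

Docker runs applications in isolated containers. Unlike virtual machines, these containers do not run on top of a hypervisor or a guest OS. They share the host's kernel and are managed by the Docker Engine.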

Key Docker Components

Before getting into how to operate Docker, here are its three main parts:

* Docker Images

* Docker Containers

* Docker Registries

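How Docker Is Operated

A Docker host runs the Docker daemon and can run many containers. To operate Docker, you use the Docker client, that is, the docker commands (for example docker pull, docker images, ...), which can reach the daemon through:

Through these, the client controls the Docker daemon on the host. The Docker client and the Docker daemon can run on the same machine or on different machines.

For more ways to use Docker, see the Docker Remote API.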
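As a rough illustration of what the Docker client does under the hood, the Python sketch below sends an HTTP request to the Docker Engine API over the daemon's Unix socket, using only the standard library. It assumes a Linux host where the daemon listens on the default /var/run/docker.sock and the current user is allowed to access it. The class and function names are hypothetical.

```python
import http.client
import json
import socket
from typing import Any

# Default location of the Docker daemon's Unix socket on Linux (assumption: not reconfigured).
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()  # do not leak the socket if the daemon is unreachable
            raise
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET) -> Any:
    """Send GET <path> to the Docker Engine API and decode the JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On such a host, `docker_api_get("/version")` should return roughly the same information as the docker version command. `docker_api_get("/containers/json")` should return a list of running containers similar to docker ps.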

Preparation

First, install Docker on the host:

Ubuntu (doc)

CentOS7 (doc)

Mac OS X, Windows

On Mac OS X or Windows you need to install Boot2Docker. Because the Docker Engine relies on Linux-specific features, Boot2Docker uses VirtualBox to create a Linux VM and runs the Docker daemon inside it. The Docker client on Mac OS X or Windows then controls the Docker daemon in that Linux VM (more on this later). Boot2Docker installation reference:

As mentioned earlier, Docker Hub offers many images you can use. First, sign up for a Docker Hub account at: https://hub.docker.com/account/signup/

Then log in to Docker Hub with docker login:
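If you script your setup, you can also drive the login step from Python. This minimal sketch assumes the docker CLI is on PATH and that your Docker version supports `--password-stdin`. The helper names and the `your-username` value are hypothetical. Piping the password through stdin keeps it out of the process list and shell history.

```python
import subprocess
from typing import List, Optional


def docker_login_cmd(username: str, password_stdin: bool = False,
                     registry: Optional[str] = None) -> List[str]:
    """Build argv for `docker login`; without a registry argument Docker Hub is used."""
    cmd = ["docker", "login", "--username", username]
    if password_stdin:
        # Read the password from standard input instead of prompting for it.
        cmd.append("--password-stdin")
    if registry:
        cmd.append(registry)
    return cmd


def docker_login(username: str, password: str) -> subprocess.CompletedProcess:
    """Log in non-interactively by piping the password to `docker login --password-stdin`."""
    return subprocess.run(
        docker_login_cmd(username, password_stdin=True),
        input=password,
        text=True,
        capture_output=True,
        check=True,
    )
```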

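Working with Docker Containers

Creating a New Container

Let's warm up by starting a CentOS 6 container that runs a command to display today's date and time. Use docker run to start a new container:

Lines 1 to 5 of the output show the centos6 image being downloaded from Docker Hub, because the Docker host did not have that image yet.

The last line of the output is the result of the date command. Note also that, apart from the time spent downloading the image, starting a container takes very little time.

* docker run: starts a new container

* centos:centos6: the image to use, written as repository:tag

* /bin/date: the command to run once the container starts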
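Options go before the image name, and everything after the image is treated as the command to run inside the container. Because of this, it can help to build the argument list programmatically. The Python sketch below rebuilds the date example and the interactive bash variant used later in this article. The helper names are hypothetical, and the docker CLI is assumed to be on PATH.

```python
import shlex
import subprocess
from typing import List, Sequence


def docker_run_cmd(image: str, command: Sequence[str] = (),
                   interactive: bool = False, remove: bool = False) -> List[str]:
    """Build argv for `docker run [OPTIONS] IMAGE [COMMAND...]`."""
    cmd = ["docker", "run"]
    if interactive:
        cmd += ["-t", "-i"]  # allocate a pseudo-TTY and keep STDIN open
    if remove:
        cmd.append("--rm")  # delete the container automatically when it exits
    cmd.append(image)        # options must come before the image name...
    cmd.extend(command)      # ...everything after it is the command run in the container
    return cmd


def execute(cmd: List[str]) -> int:
    """Run the command without a shell and return its exit status."""
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    # Print the two commands used in this article instead of running them.
    print(shlex.join(docker_run_cmd("centos:centos6", ["/bin/date"])))
    # docker run centos:centos6 /bin/date
    print(shlex.join(docker_run_cmd("centos:centos6", ["bash"], interactive=True)))
    # docker run -t -i centos:centos6 bash
```

`shlex.join` only formats the command for display. `execute()` passes the list straight to subprocess without a shell, so no quoting is needed.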

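docker run also has options that open an interactive terminal inside the container:

How do you leave the container's terminal?

1. Type exit or press Control/Ctrl + D. The container you are using stops, and the next docker run starts a brand-new container.

2. Press Control/Ctrl + P, then Control/Ctrl + Q, to detach from the container's tty while leaving the container running.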

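Managing Containers

If you only detach from a container's terminal, the container is not closed or stopped. The following sections explain how to manage containers.

Container ID

Every container has a unique CONTAINER ID. Above, running docker run -t -i centos:centos6 bash started a new container and opened its terminal:

"d9485c95064b" is the CONTAINER ID. From here on, we use this ID to tell containers apart.

Listing Containers

The Docker client provides docker ps to list the containers that are currently running: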
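docker ps also accepts a `--format` option that takes a Go template, which makes its output easy to parse. The sketch below assumes a Docker version that supports `--format` on docker ps. The chosen fields and the helper names are illustrative.

```python
import subprocess
from typing import Dict, List

# Go template passed to `docker ps --format`; fields are separated by tab characters.
PS_FORMAT = "{{.ID}}\t{{.Image}}\t{{.Status}}\t{{.Names}}"
PS_FIELDS = ("id", "image", "status", "names")


def parse_ps_output(text: str) -> List[Dict[str, str]]:
    """Turn tab-separated `docker ps --format` output into one dict per container."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines, e.g. when no container is running
        rows.append(dict(zip(PS_FIELDS, line.split("\t"))))
    return rows


def list_containers(include_stopped: bool = False) -> List[Dict[str, str]]:
    """Call `docker ps` (or `docker ps -a`) and parse its output."""
    cmd = ["docker", "ps", "--format", PS_FORMAT]
    if include_stopped:
        cmd.append("-a")
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_ps_output(out)
```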

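Running Commands in a Container

After you detach from a container's terminal, the container keeps running, so you can use docker exec to run commands in it. For example, the following returns to the terminal of container 034972c95a2d: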
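docker exec only works on a running container, so a script may want to check the container's state before attaching. The sketch below does that by reading `.State.Running` through `docker inspect --format`. The helper names are hypothetical.

```python
import subprocess
from typing import List, Sequence


def docker_exec_cmd(container: str, command: Sequence[str],
                    interactive: bool = True) -> List[str]:
    """Build argv for `docker exec [-t -i] CONTAINER COMMAND...`."""
    cmd = ["docker", "exec"]
    if interactive:
        cmd += ["-t", "-i"]  # same TTY/STDIN flags as docker run
    cmd.append(container)
    cmd.extend(command)
    return cmd


def is_running(container: str) -> bool:
    """Ask `docker inspect` whether the container is currently running."""
    out = subprocess.run(
        ["docker", "inspect", "--format", "{{.State.Running}}", container],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.strip() == "true"


def shell_into(container: str) -> int:
    """Open bash in a running container; refuse if it has been stopped."""
    if not is_running(container):
        raise RuntimeError(f"{container} is not running; start it with docker start first")
    return subprocess.run(docker_exec_cmd(container, ["bash"])).returncode
```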

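Stopping a Container

To stop a running container, use docker stop:

Starting a Stopped Container

Use docker start to start a stopped container:

When started, the container runs the same command and arguments it was created with. If it was created without a terminal and interactive mode, it exits again as soon as that command finishes.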
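You can script the stop/start pair as well. In this sketch, `docker stop -t` sets how many seconds Docker waits after the stop signal (SIGTERM by default) before it kills the container. `docker start -a -i` attaches your terminal to the restarted container, which is useful for containers originally created with -t -i. The helper names are hypothetical.

```python
from typing import List, Optional


def docker_stop_cmd(container: str, grace_seconds: Optional[int] = None) -> List[str]:
    """Build argv for `docker stop`, optionally overriding the grace period."""
    cmd = ["docker", "stop"]
    if grace_seconds is not None:
        # Seconds to wait after the stop signal before the container is killed.
        cmd += ["-t", str(grace_seconds)]
    cmd.append(container)
    return cmd


def docker_start_cmd(container: str, attach: bool = False) -> List[str]:
    """Build argv for `docker start`, optionally attaching the terminal."""
    cmd = ["docker", "start"]
    if attach:
        # -a attaches STDOUT/STDERR, -i attaches STDIN.
        cmd += ["-a", "-i"]
    cmd.append(container)
    return cmd
```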

Removing a Container

docker ps -a lists every container that has been created and not yet removed. Use docker rm to remove the containers you no longer need:
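To clean up in bulk, you can combine docker ps -a with a filter. This sketch lists only the IDs (`-q`) of exited containers and builds a single docker rm command for them. The `-f` flag would also force-remove running containers, so it is off by default. The helper names are hypothetical.

```python
import subprocess
from typing import List, Sequence


def exited_container_ids() -> List[str]:
    """Return the IDs of stopped containers (`docker ps -a -q --filter status=exited`)."""
    out = subprocess.run(
        ["docker", "ps", "-a", "-q", "--filter", "status=exited"],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.split()


def docker_rm_cmd(containers: Sequence[str], force: bool = False) -> List[str]:
    """Build one `docker rm` command for several containers."""
    if not containers:
        raise ValueError("no containers to remove")
    cmd = ["docker", "rm"]
    if force:
        cmd.append("-f")  # also kill and remove running containers
    cmd.extend(containers)
    return cmd
```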

Creating an Extra Mount Point in a Container

When creating a container, you can also add a custom mount point (independent of the host's file system):
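The exact command is not shown here. This sketch assumes it used docker run -v with only a container path, which makes Docker create an anonymous volume there; the `/data` path is hypothetical. The sketch builds such a command and reads where the volume lives on the host from docker inspect output. It assumes a Docker version whose inspect output includes a `Mounts` array. The helper names are hypothetical.

```python
import json
from typing import Dict, List


def volume_run_cmd(image: str, container_path: str) -> List[str]:
    """`-v` with only a container path asks Docker to create an anonymous volume there."""
    return ["docker", "run", "-t", "-i", "-v", container_path, image, "bash"]


def mounts_from_inspect(inspect_output: str) -> Dict[str, str]:
    """Map each mount's path inside the container to its source on the host.

    `docker inspect` prints a JSON array with one object per inspected container.
    """
    mounts: Dict[str, str] = {}
    for container in json.loads(inspect_output):
        for mount in container.get("Mounts", []):
            mounts[mount["Destination"]] = mount["Source"]
    return mounts
```

The volume's data lives in Docker's own storage area (on Linux, typically under /var/lib/docker/volumes/) rather than in a directory you chose on the host.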

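Sharing Data Between the Host and a Container

Docker can also share a host directory with a container, which is handy during development. Mount the project directory into the container, and you can test and run it right there. Here we mount /home/aming/Docker/tutorial/ on the host to /app in the container: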
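A bind mount uses `-v HOST_DIR:CONTAINER_DIR`, and the sketch below builds that argument for the paths used here. On SELinux-enabled hosts such as CentOS 7, accessing the mounted directory can fail with "Permission denied" (see the Supplement links). Appending `:z` (shared) or `:Z` (private) asks Docker to relabel the directory, so the helper accepts an optional label. The helper names are hypothetical, and requiring absolute paths is a choice of this sketch.

```python
import os
from typing import List, Optional


def bind_mount_arg(host_dir: str, container_dir: str,
                   selinux_label: Optional[str] = None) -> str:
    """Build the HOST_DIR:CONTAINER_DIR[:z|Z] value for `docker run -v`."""
    if not (os.path.isabs(host_dir) and os.path.isabs(container_dir)):
        raise ValueError("this sketch only accepts absolute paths")
    spec = f"{host_dir}:{container_dir}"
    if selinux_label is not None:
        if selinux_label not in ("z", "Z"):
            raise ValueError("selinux_label must be 'z' (shared) or 'Z' (private)")
        spec += f":{selinux_label}"
    return spec


def bind_run_cmd(image: str, host_dir: str, container_dir: str,
                 selinux_label: Optional[str] = None) -> List[str]:
    """Build argv for an interactive container with a host directory mounted."""
    volume = bind_mount_arg(host_dir, container_dir, selinux_label)
    return ["docker", "run", "-t", "-i", "-v", volume, image, "bash"]
```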

Managing Docker Images

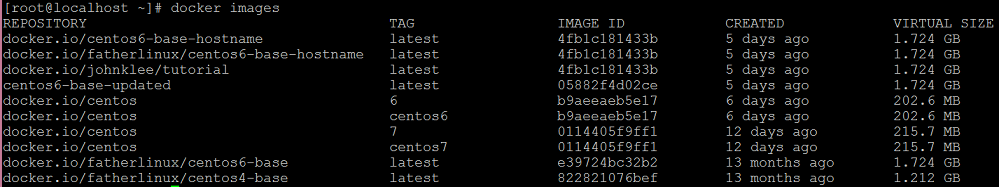

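As mentioned in "Creating a New Container", when docker run creates a new container and Docker finds no matching image locally, it downloads one from Docker Hub by default. How do we find out which images are currently available? docker images lists the images that already exist on the host:

The output shows each image's repository name, tag, and other details mentioned earlier.

1. Searching for Images

We can use docker search to search for images on Docker Hub, for example 'tomcat':

2. Downloading an Image

We use docker pull to download one of the search results, "docker.io/consol/tomcat-7.0":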
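The names docker.io/consol/tomcat-7.0 and consol/tomcat-7.0 (used in the next step) refer to the same image. When a reference has no registry host, Docker assumes Docker Hub (docker.io). Single-name official images live under library/, and a missing tag means latest. The simplified Python sketch below mimics those rules. It ignores digests (@sha256:...) and other edge cases, and the names are hypothetical.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize_image_ref(ref: str) -> ImageRef:
    """Expand a short image reference roughly the way Docker does (digests not handled)."""
    name, tag = ref, DEFAULT_TAG
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        # A colon after the last slash separates the tag (a colon before it is a registry port).
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest  # first component looks like a host name
    else:
        registry, repository = DEFAULT_REGISTRY, name
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository  # official images live under library/
    return ImageRef(registry, repository, tag)
```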

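To see which images are now on the host, run docker images again. It lists every image on the host:

3. Creating a New Image

The consol/tomcat-7.0 image we just downloaded already contains Tomcat and a Java environment, so we reuse it, install Git, and turn the result into a new image:

We now have a new container with container ID 33f15b0c22f2. Use docker commit to turn this container into a new image: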
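docker commit can also record a commit message (`-m`) and an author (`-a`). The sketch below builds such a command for the container above. The target repository `your-username/tomcat-git` and the author string are hypothetical placeholders.

```python
from typing import List, Optional


def docker_commit_cmd(container: str, repository: str, tag: str = "latest",
                      message: Optional[str] = None,
                      author: Optional[str] = None) -> List[str]:
    """Build argv for `docker commit [-m MSG] [-a AUTHOR] CONTAINER REPOSITORY:TAG`."""
    cmd = ["docker", "commit"]
    if message:
        cmd += ["-m", message]  # commit message, shown by `docker history`
    if author:
        cmd += ["-a", author]   # author recorded in the image metadata
    cmd += [container, f"{repository}:{tag}"]
    return cmd
```

Committing snapshots whatever changed inside the container. The Dockerfile approach described below records the same steps as a repeatable script instead.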

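After setting up the required environment inside the container, we committed the modified container as a new image, which now includes Git for testing.

4. Uploading to Docker Hub

Once we have built a new image, we can push it to Docker Hub or a private registry to share it. First, create a repo of your own on the Docker Hub website:

(1) Click "Add Repository" and choose "Repository"

(2) Enter the repo name (it must be lowercase) and a description, then click "Add Repository"

(3) The repo is now created on Docker Hub

After creating the repo on Docker Hub, you can upload the image with docker push: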
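Docker Hub expects the image name to be USERNAME/REPO, so a locally committed image usually needs a docker tag before docker push. The sketch below checks the lowercase naming rule from step (2). It uses a regular expression modeled on Docker's reference grammar for path components, then builds the tag and push commands. The names and the example repository are hypothetical.

```python
import re
from typing import List

# One path component: lowercase letters/digits, optionally joined by '.', '_', '__' or dashes.
_COMPONENT = re.compile(r"[a-z0-9]+(?:(?:[._]|__|-*)[a-z0-9]+)*")


def is_valid_repo_name(name: str) -> bool:
    """Check every '/'-separated component of a repository name."""
    return all(_COMPONENT.fullmatch(part) for part in name.split("/"))


def push_cmds(local_image: str, username: str, repo: str, tag: str = "latest") -> List[List[str]]:
    """Tag a local image as USERNAME/REPO:TAG, then push it to Docker Hub."""
    repository = f"{username}/{repo}"
    if not is_valid_repo_name(repository):
        raise ValueError(f"invalid repository name: {repository!r} (use lowercase)")
    target = f"{repository}:{tag}"
    return [
        ["docker", "tag", local_image, target],
        ["docker", "push", target],
    ]
```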

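5. Removing an Image

Images are removed with docker rmi. If an image cannot be removed, a container is probably still using it. Remove that container first with docker rm, and the image can then be removed.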
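The sketch below automates that order. It finds containers created from an image with the `ancestor` filter of docker ps -a, removes them with docker rm, and then runs docker rmi. Running containers must be stopped first (or removed with docker rm -f). Note that the ancestor filter also matches containers created from images built on top of the given one. The helper names are hypothetical.

```python
import subprocess
from typing import List, Sequence


def containers_from_image(image: str) -> List[str]:
    """IDs of all containers (running or stopped) whose image descends from `image`."""
    out = subprocess.run(
        ["docker", "ps", "-a", "-q", "--filter", f"ancestor={image}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.split()


def image_removal_cmds(image: str, container_ids: Sequence[str]) -> List[List[str]]:
    """Remove dependent containers first, then the image itself."""
    cmds: List[List[str]] = []
    if container_ids:
        cmds.append(["docker", "rm", *container_ids])
    cmds.append(["docker", "rmi", image])
    return cmds
```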

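Writing a Dockerfile

We have seen how to build our own images and share them through Docker Hub. Besides creating containers from ready-made images, you can write a Dockerfile, a script that describes how to build an image, and let docker build create the image from it. The resulting image can also be pushed to Docker Hub and shared.

The following shows how to build an image automatically with a Dockerfile. As before, we start from docker.io/consol/tomcat-7.0 and build an environment that includes Git.

(1). Create a directory and a Dockerfile

(2). Edit the Dockerfile

* FROM: the base image to build on

* MAINTAINER: the person who maintains the image

* RUN: a command to run inside the image while it is being built

* ADD: adds local files or remote files (URLs) to a directory inside the image; local tar archives are extracted automatically. To simply copy local files or directories into the image, use COPY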
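Putting the instructions above together, the Dockerfile for this example has roughly the shape produced by the sketch below. The RUN line assumes consol/tomcat-7.0 is based on a Debian or Ubuntu image that provides apt-get, which you should confirm. The maintainer string is a placeholder. Newer Docker releases deprecate MAINTAINER in favor of `LABEL maintainer="..."`.

```python
from typing import Sequence


def render_dockerfile(base_image: str, maintainer: str, run_commands: Sequence[str]) -> str:
    """Assemble FROM / MAINTAINER / RUN lines into Dockerfile text."""
    lines = [f"FROM {base_image}", f"MAINTAINER {maintainer}"]
    lines += [f"RUN {command}" for command in run_commands]  # each RUN adds an image layer
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Assumption: the base image is Debian/Ubuntu based, so apt-get is available.
    print(render_dockerfile(
        "consol/tomcat-7.0",
        "Your Name <you@example.com>",
        ["apt-get update && apt-get install -y git"],
    ), end="")
```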

(3). Build the image from the Dockerfile

A Dockerfile works like a script: docker build reads its contents and builds a new image accordingly:
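This Python sketch covers steps (1) and (3): it writes the Dockerfile into its own directory, then builds the docker build command. The `-t` option names the image. The last argument is the build context, where docker build looks for a file named Dockerfile by default. The directory and image names are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import List


def write_dockerfile(context_dir: str, content: str) -> Path:
    """Create the build directory if needed and write `Dockerfile` into it."""
    directory = Path(context_dir)
    directory.mkdir(parents=True, exist_ok=True)
    dockerfile = directory / "Dockerfile"
    dockerfile.write_text(content)
    return dockerfile


def docker_build_cmd(context_dir: str, tag: str) -> List[str]:
    """`-t` names the image; the last argument is the build context directory."""
    return ["docker", "build", "-t", tag, context_dir]


if __name__ == "__main__":
    # Demo in a throwaway directory; it prints the command instead of running docker.
    with tempfile.TemporaryDirectory() as tmp:
        context = str(Path(tmp) / "tomcat-git")
        path = write_dockerfile(context, "FROM consol/tomcat-7.0\n")
        print(path.read_text(), end="")
        print(" ".join(docker_build_cmd(context, "your-username/tomcat-git")))
```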

Supplement

* Docker User Guide - Managing Data in Containers

* Permission denied on accessing host directory in docker

* VBird - Chapter 17: Process Management and an Introduction to SELinux
